AI Tools for Thesis Writing in 2026: Save Time & Improve Quality

Writing a thesis can involve many steps, such as reading academic papers, organising ideas, and formatting references. This process takes time, especially when working with large amounts of research.

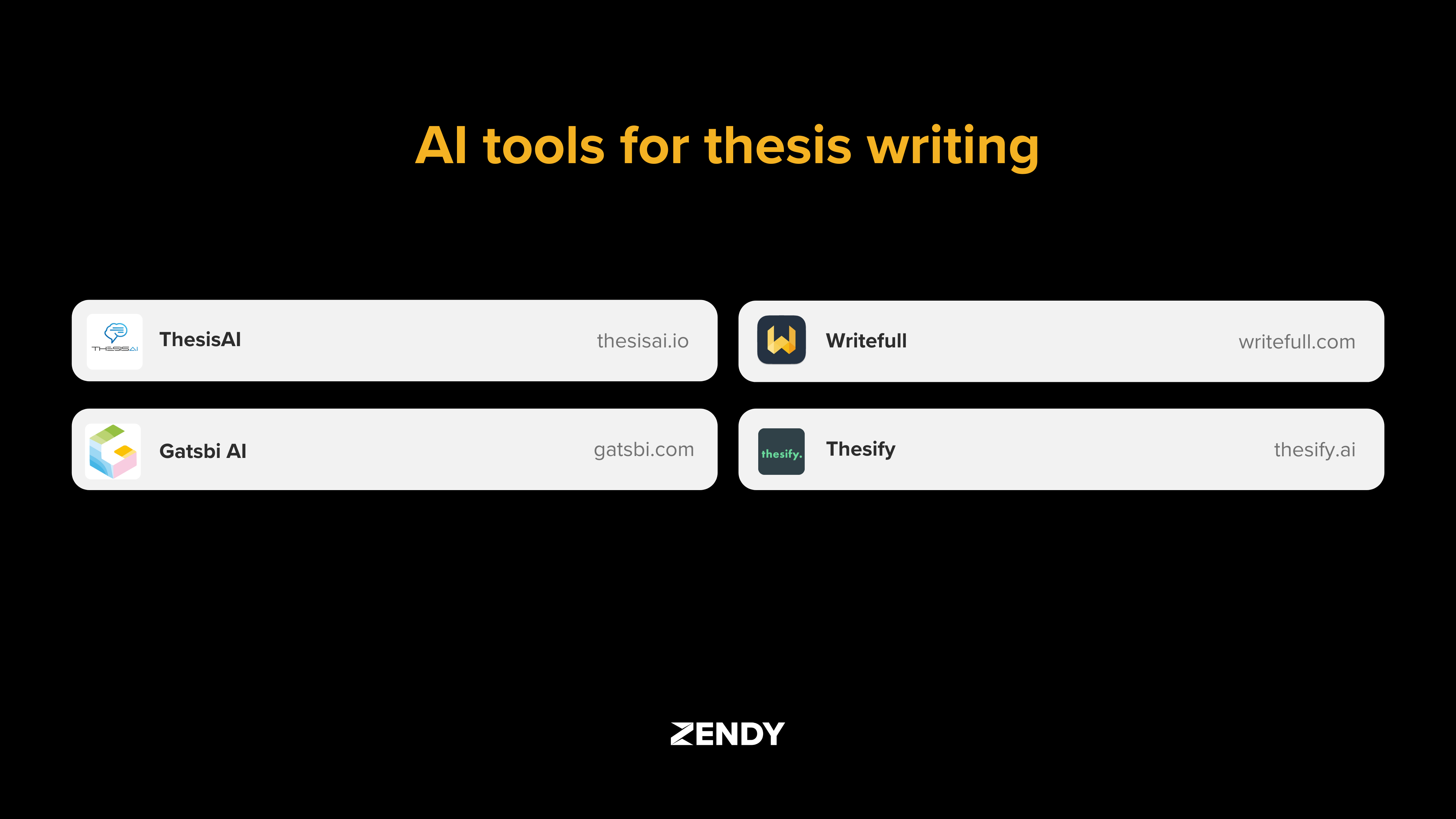

In this post, we’ll introduce you to some of the best AI tools designed for thesis writing.

These tools don't replace original thinking or writing. Instead, they handle time-consuming tasks so researchers can focus on developing their ideas and arguments. The main benefits include:

- Time savings: AI tools can summarise articles in minutes rather than hours, helping researchers review more literature efficiently

- Writing clarity: These tools identify confusing sentences, awkward phrasing, and inconsistencies that might distract readers

- Organisation: Many tools help track sources, organise notes, and maintain consistent formatting throughout long documents

How ThesisAI, Gatsbi, Writefull, and Thesify Enhance Research

Each of these AI tools supports different aspects of thesis writing. When used together, they can help with the entire process from initial research to final editing.

ThesisAI

ThesisAI generates a complete scientific document (up to 50 pages) from a single prompt. It integrates with LaTeX, Overleaf, Zotero, and Mendeley for formatting and citation management, and includes automated research via Semantic Scholar to help you discover relevant papers. It also supports writing in more than 20 languages.

Gatsbi

Gatsbi helps maintain logical structure throughout a thesis. It analyses how ideas connect across chapters and sections, ensuring the argument flows smoothly from beginning to end.

The tool supports technical elements like equations, citations, and data tables, making it especially useful for scientific writing. Unlike some AI tools, Gatsbi focuses on organising existing content rather than generating new text.

Writefull

Writefull improves academic language by checking grammar, vocabulary, and tone. It integrates with Microsoft Word and Overleaf (for LaTeX documents), providing feedback as you write.

The tool understands discipline-specific language and conventions, offering suggestions that match academic expectations. Its features include abstract generation, title refinement, and paraphrasing options for clearer expression.

Thesify

Thesify evaluates the strength of academic arguments and evidence. Rather than focusing only on grammar, it analyses whether claims are supported, arguments are logical, and ideas are clearly expressed.

The feedback resembles what you might receive from a professor or peer reviewer, with comments on structure, reasoning, and evidence use. This helps identify weaknesses in the argument before submission.

| Tool | Main Purpose | Works Best For | Compatible With | Special Features |
|---|---|---|---|---|
| ThesisAI | Generating complete scientific documents | Literature reviews | LaTeX, Overleaf, Zotero, Mendeley | Semantic Scholar paper discovery, 20+ languages |
| Gatsbi | Organising thesis structure | Maintaining logical flow | Web platform | Supports technical elements, citation integration |
| Writefull | Improving academic language | Grammar and style refinement | Word, Overleaf | Real-time feedback, LaTeX support |
| Thesify | Evaluating argument quality | Getting expert-like feedback | Web browsers | Logic assessment, evidence evaluation |

Key Functions of AI Thesis Writing Tools

AI thesis tools typically excel in three main areas: summarising research, improving language, and managing citations. Understanding these functions helps you choose the right tool for specific writing challenges.

Research Summaries

AI summarisation tools read academic papers and create concise overviews highlighting key findings, methods, and conclusions. This technique helps researchers quickly grasp the main points without reading entire articles.

For example, when reviewing literature for a psychology thesis, the AI might extract information about study participants, experimental design, and statistical results. This allows researchers to compare multiple studies more efficiently.

These summaries serve as starting points for deeper reading, not replacements for understanding the full text. You still need to verify important details and evaluate the quality of the original research.

More broadly, AI thesis tools carry risks if misused, including academic misconduct, loss of originality, privacy concerns, and inaccurate outputs. University policies differ, so always check your institution's regulations, use AI responsibly, and critically review all AI-generated work.

Language Improvement

Language tools analyse writing for grammar, clarity, vocabulary, and academic tone. They identify issues like wordiness, passive voice overuse, and unclear phrasing that might confuse readers.

Some tools, like Writefull, understand discipline-specific conventions. They can suggest appropriate terminology for fields like medicine, engineering, or literature, helping writers match the expectations of their academic community.

These suggestions appear as you write or during review, similar to having an editor check your work. The writer maintains control over which changes to accept, ensuring the text still reflects their voice and ideas.

Citation Management

Citation tools format references according to academic styles like APA, MLA, or Chicago. They help maintain consistency throughout the document and ensure all sources are properly acknowledged.

Many tools can generate citations automatically from a DOI, URL, or article title. They also check for missing information and formatting errors that might otherwise be overlooked.

This function helps prevent unintentional plagiarism by making proper attribution easier. It also saves time during the final editing process when references need to be checked and formatted.

How to Use AI Tools Ethically in Academic Writing

Universities increasingly recognise that AI tools can support the writing process, but they distinguish between acceptable assistance and potential academic misconduct.

Acceptable uses typically include grammar checking, citation formatting, and research organisation. These functions help improve presentation without changing the core content or ideas.

Most institutions draw the line at using AI to generate content or develop arguments. The thinking, analysis, and conclusions should come from the student, not from an AI system.

- Be transparent: Many universities now ask students to disclose which AI tools they used and how they were applied in the writing process

- Verify information: AI tools sometimes make mistakes with citations or summaries, so always check against original sources

- Maintain ownership: The ideas, arguments, and conclusions should reflect your understanding, not text generated by an AI

Universities like Cambridge, Oxford, and MIT have published guidelines explaining how students can use AI tools appropriately. These policies typically focus on using AI as an assistant rather than a replacement for original work.

How to Select the Right AI Tool for Your Field

Different academic fields have specific writing conventions and requirements. Choosing tools that understand these differences improves their effectiveness.

Science and Engineering

Science and engineering theses often include technical elements like equations, data tables, and specialised terminology. Tools like Gatsbi and Writefull support these features, including LaTeX formatting commonly used in these fields.

These disciplines typically use structured formats with clearly defined sections (introduction, methods, results, discussion). AI tools can help maintain this structure and ensure each section contains the expected content.

Humanities and Social Sciences

Humanities and social science writing often emphasises argument development, theoretical frameworks, and textual analysis. Tools like Thesify that evaluate argument quality and evidence use are particularly helpful.

These fields may use discipline-specific citation styles like Chicago or MLA. Citation tools that support these formats help maintain proper attribution of sources, especially when working with primary texts and archival materials.

Interdisciplinary Research

Interdisciplinary theses combine methods and conventions from multiple fields. This can create challenges when using AI tools designed for specific disciplines.

Researchers working across disciplines may benefit from using multiple tools together. For example, they might use Writefull for language improvement and Thesify for feedback on argument structure and evidence.

Practical Integration of AI Tools in Thesis Writing

Adding AI tools to your writing process works best with a thoughtful approach. Starting small and gradually expanding tool use helps avoid overwhelming changes to established work habits.

Begin with One Chapter

Testing an AI tool on a single thesis chapter or section provides a clear sense of its benefits and limitations. This approach allows for comparison between AI-assisted and regular writing processes.

After completing the test section, evaluate whether the tool improved quality, saved time, or created new challenges. This information helps decide whether to continue using the tool for the full thesis.

Create Clear Boundaries

Deciding in advance which tasks you'll use AI for helps maintain academic integrity. For example, you might use AI for grammar checking and citation formatting but not for generating content or developing arguments.

These boundaries ensure the thesis remains your own intellectual work while still benefiting from technological assistance with mechanical aspects of writing.

Combine Complementary Tools

Different tools excel at different tasks. Using them together creates a more complete support system for thesis writing.

A sample workflow might include:

- Using ThesisAI to gather and summarise research for the literature review

- Organising the thesis structure with Gatsbi to ensure logical flow

- Improving language and style with Writefull during drafting

- Getting feedback on argument quality with Thesify before submission

This approach uses each tool for its strengths while avoiding over-reliance on any single program.

The Future of AI in Thesis Writing

AI tools for academic writing continue to evolve, becoming more specialised and integrated with research workflows. Current trends suggest several developments on the horizon.

These tools increasingly understand discipline-specific conventions and terminology. This specialisation helps them provide more relevant feedback for different academic fields.

Integration between research platforms and writing tools is also improving. This allows researchers to move smoothly between finding sources, taking notes, drafting content, and formatting references.

As these tools develop, access to quality academic content remains essential. Zendy's AI-powered research library offers access to peer-reviewed articles that complement AI writing tools, creating a more complete research environment.

Frequently Asked Questions About AI Thesis Writing Tools

How do AI thesis writing tools protect my data and research?

Most academic AI tools have privacy policies stating they don't use uploaded content to train their models and maintain confidentiality of research materials, though specific protections vary by platform.

Do universities allow students to use AI tools for thesis writing?

Many universities permit AI tools for editing, citation formatting, and grammar checking, but typically require original thinking and content creation from the student; check your institution's specific guidelines.

How do ThesisAI, Gatsbi, Writefull, and Thesify differ from general AI like ChatGPT?

These specialised academic tools understand scholarly conventions, integrate with research workflows, and focus on specific aspects of thesis writing rather than generating general content like ChatGPT.

Can AI thesis tools help with discipline-specific terminology?

Yes, tools like Writefull and Thesify recognise field-specific terminology and academic conventions across disciplines, offering more relevant suggestions than general writing tools.

Will AI tools for thesis writing improve my research quality?

AI tools can enhance presentation quality and efficiency but don't improve the underlying research quality; they help organise and communicate ideas more clearly rather than generating new insights.

Zendy at Charleston Conference Asia 2026: Supporting Research Access in Emerging Communities

In January 2026, Zendy will be heading to Charleston Conference Asia, taking place in Bangkok, Thailand. This marks the first-ever Asia edition of the Charleston Conference, an event long known for bringing librarians, publishers, and information professionals together for practical, honest conversations about scholarly communication. The Asia edition builds on that same spirit while focusing on the realities, challenges, and opportunities shaping research access across the region.

A space for real conversations about access and equity

Charleston Conference Asia brings together voices from across libraries, publishing, and research support to explore how knowledge is discovered, accessed, and shared, particularly in emerging and underrepresented research communities.

For Zendy, this aligns closely with our mission: supporting more equitable access to trusted scholarly content, while giving institutions practical tools that reflect how research is actually done today.

Zendy session spotlight

We’re proud to have Sara Crowley Vigneau, our Partnership Relations Manager, speaking at the conference on January 28 at 11:45 AM. Her session, “Empowering Early-Career Researchers in Emerging Research Communities,” explores inclusive models for improving access to research, looking beyond theory to what works in practice for institutions, libraries, and early-career scholars.

Sara will be joined by a strong panel of speakers bringing perspectives from publishing and academic libraries:

- Alexandra Campbell, Senior Editor, Springer Nature

- Christopher Chan, University Librarian, Hong Kong Baptist University

- Mayasari Abdul Majid, Library Director, LSL Education Group Sdn. Bhd.

Together, the session will examine how libraries, publishers, and platforms can better support researchers working within diverse institutional and regional contexts.

Why this matters for libraries and institutions

Early-career researchers often face barriers that go beyond funding or publishing, from limited access to content, to fragmented discovery tools, to language and regional constraints. Events like Charleston Conference Asia create space to address these challenges openly and collaboratively.

Zendy’s participation reflects our ongoing focus on:

- Expanding access to trusted academic content

- Supporting libraries with flexible, cost-aware models

- Providing AI-assisted research tools built with transparency and integrity in mind

Let’s connect in Bangkok

If you’re attending Charleston Conference Asia 2026, we’d love to connect. Whether you’re a librarian, publisher, or research leader, this is a great opportunity to exchange ideas and explore how access, discovery, and responsible AI can better support research communities across Asia and beyond.

To schedule a meeting with our team, please email us at info@zendy.io.

For full event details, visit Charleston Conference Asia.

What’s New in ZAIA: Our Biggest Update Yet

We’ve rolled out one of the biggest updates to Zendy AI Assistant, ZAIA. These improvements focus on making research faster, more intuitive, and far more reliable. Here’s what’s new.

Inline References You Can Trust

You no longer have to scroll, copy, or cross-check to figure out where an insight came from. ZAIA now adds references directly inside its responses. If ZAIA summarises a study, explains a concept, or pulls a fact from a paper, the source appears right there in the answer: clear, traceable, and easy to verify. It feels more like reading a well-cited academic explanation than an AI output.

Ask in Any Language, Get Answers in the Same Language

Whether you’re researching in Arabic, Spanish, French, or Swahili, ZAIA now automatically responds in the language you use. There’s no need to switch tools, tweak settings, or use separate translators. Just ask your question in your preferred language, and ZAIA replies in the same one. It’s a smoother experience for multilingual classrooms, international research teams, and anyone working beyond English.

Search Smarter with the SOLR Agent

Sometimes you know the exact paper you need, but you don’t have the title, DOI, or permalink memorised. Now you don’t have to. ZAIA’s new SOLR Agent can search using:

- DOI

- Paper title

- A Zendy permalink

Just drop in any of these, and ZAIA fetches the correct paper instantly. It’s especially useful when you’re deep in a literature review and need to track down sources without losing your rhythm.

How does it work?

SOLR is the search engine behind ZAIA’s paper search. It helps ZAIA find the exact research paper you’re looking for using clear identifiers like a DOI, paper title, or Zendy link. Instead of guessing keywords or scanning long result lists, SOLR matches your input directly to the right paper, quickly and accurately.

ZAIA Can Now Help With Your Zendy Account

You can now ask ZAIA about your subscription, billing, or common FAQs directly inside the platform. No switching tabs. No waiting for replies. No digging through help pages. ZAIA now serves as a support companion as well as a research assistant, which is especially helpful when you want quick answers in the middle of studying or writing.

A Clearer, More Transparent UI

One of the most noticeable upgrades is the updated interface. You’ll now see clearer “thinking” steps that show how ZAIA processes your request. It’s more transparent, easier to follow, and gives you a better sense of how ZAIA arrives at each answer, which many researchers have been asking for.

Why These Updates Matter

Every improvement in this update has one goal: to make research smoother and more trustworthy. Whether you're analysing papers, fact-checking a claim, working across languages, or sorting out your subscription, ZAIA is now better at supporting the full research journey.

And we’re not done. This update is part of a larger shift toward a more personal and adaptive ZAIA, one that learns from how each researcher works. For now, we hope these updates make your experience on Zendy faster, clearer, and more enjoyable. If you’d like to see these features in action, just open ZAIA, Zendy AI Assistant, and try them out.

Zendy and Casalini Libri Partner to Expand Access to European Humanities and AI Research Tools

Oxford, UK – December 2025 – Zendy, the AI-powered research library, and Casalini Libri, one of the leading providers of European scholarly content and library services, have signed a partnership agreement to expand access to high-quality academic research in the humanities and social sciences.

This strategic collaboration will make Casalini Libri’s extensive collection of European scholarly publications, including academic journals, monographs, and specialist research outputs, available on Zendy’s platform. The content will be discoverable through ZAIA, Zendy’s AI research assistant, helping librarians, researchers, students, and academic institutions worldwide find and engage with relevant European scholarship more efficiently.

With over 800,000 users across 191 countries and territories, Zendy continues to grow as a trusted destination for discovering academic knowledge. By integrating Casalini Libri’s content, Zendy strengthens its mission to improve access to regionally significant scholarship and ensure that important European research is more visible, searchable, and widely used.

Casalini Libri is internationally recognised for its deep expertise in European humanities and social sciences, with a particular focus on Southern Europe and broader continental scholarship. Through this partnership, these resources will reach a wider global audience, helping address long-standing discoverability challenges faced by non-English academic content.

The collaboration also reflects Zendy’s commitment to linguistic and regional diversity in research. In a scholarly ecosystem where English-language publications dominate, increasing access to European research traditions is essential for a more balanced and representative academic landscape.

Zendy users will now be able to explore Casalini Libri’s content seamlessly on the platform, supporting teaching, research, and interdisciplinary work rooted in European intellectual traditions. Together, Zendy and Casalini Libri aim to increase the global reach and impact of European scholarship.

For more information, please contact:

Lisette van Kessel, Head of Marketing

Email: l.vankessel@knowledgee.com

About Zendy

Zendy is an AI-powered, mission-driven, trustworthy research library dedicated to increasing the accessibility and discoverability of scholarly literature, particularly in the global south and underserved regions. The platform currently serves over 800,000 users across 200+ countries and territories, offering a comprehensive collection of academic journals, books, and reports to empower researchers, educators, and students. Zendy also provides AI tools, including its research assistant ZAIA, to help users read, analyse, and summarise academic content more efficiently.

Website: https://zendy.io

About Casalini Libri

Casalini Libri is a leading supplier of European scholarly publications and library services, specialising in humanities and social sciences research. For decades, Casalini Libri has worked closely with publishers, libraries, and research institutions to curate, distribute, and preserve high-quality academic content, supporting the global exchange of European scholarship.

Address

John Eccles House, Robert Robinson Avenue,

Oxford Science Park, Oxford

OX4 4GP, United Kingdom